Adversarial Vandal

Drawing on top of art pieces to fool a classification network.

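A differentiable rasterizer was proposed and applied to painterly rendering: randomly initialized curves are fitted to a target image by gradient descent on their parameters.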
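
To make the painterly-rendering loop concrete, here is a minimal NumPy sketch that rests on heavy simplifying assumptions. Strokes are isotropic Gaussian blobs on a 16×16 grayscale canvas rather than colored Bézier curves, the loss is plain MSE, and the gradients are derived by hand instead of coming from a real differentiable rasterizer. All names (`rasterize`, `backprop`, `painterly`) are hypothetical and not taken from the project's code.

```python
import numpy as np

H = W = 16     # toy grayscale canvas (assumption)
SIGMA = 1.5    # width of every soft stroke (assumption)
ys, xs = np.mgrid[0:H, 0:W].astype(float)

def rasterize(colors, centers):
    """Hypothetical toy rasterizer: stroke k is a Gaussian blob with intensity colors[k]."""
    dy = ys[None] - centers[:, 0, None, None]        # (K, H, W) offsets from each center
    dx = xs[None] - centers[:, 1, None, None]
    g = np.exp(-(dy**2 + dx**2) / (2 * SIGMA**2))    # per-stroke coverage in (0, 1]
    image = (colors[:, None, None] * g).sum(axis=0)  # strokes add up into one (H, W) image
    return image, (g, dy, dx)

def backprop(colors, cache, grad_image):
    """Chain rule from dLoss/dImage to dLoss/dColors and dLoss/dCenters."""
    g, dy, dx = cache
    w = g * grad_image[None]
    grad_colors = w.sum(axis=(1, 2))
    grad_centers = np.stack([(w * dy).sum(axis=(1, 2)),
                             (w * dx).sum(axis=(1, 2))], axis=1)
    return grad_colors, grad_centers * colors[:, None] / SIGMA**2

def painterly(target, num_strokes=40, steps=200, lr=0.5, seed=0):
    """Fit randomly initialized strokes to `target` by gradient descent on the MSE."""
    rng = np.random.default_rng(seed)
    colors = rng.uniform(0, 1, num_strokes)
    centers = rng.uniform(0, [H, W], (num_strokes, 2))
    losses = []
    for _ in range(steps):
        image, cache = rasterize(colors, centers)
        losses.append(((image - target) ** 2).mean())
        grad_image = 2 * (image - target) / image.size   # d(MSE)/d(image)
        grad_colors, grad_centers = backprop(colors, cache, grad_image)
        colors -= lr * grad_colors
        centers -= lr * grad_centers
    return colors, centers, losses

target = np.zeros((H, W))
target[4:12, 4:12] = 1.0   # a bright square as the toy "painting" to reproduce
colors, centers, losses = painterly(target)
print(f"MSE: {losses[0]:.3f} -> {losses[-1]:.3f}")
```

A real setup would use a proper curve rasterizer with automatic differentiation. The hand-written chain rule here only serves to keep the sketch dependency-light and easy to inspect.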

Disclaimer: this is a project I did for IFT 6756: Game Theory and ML.

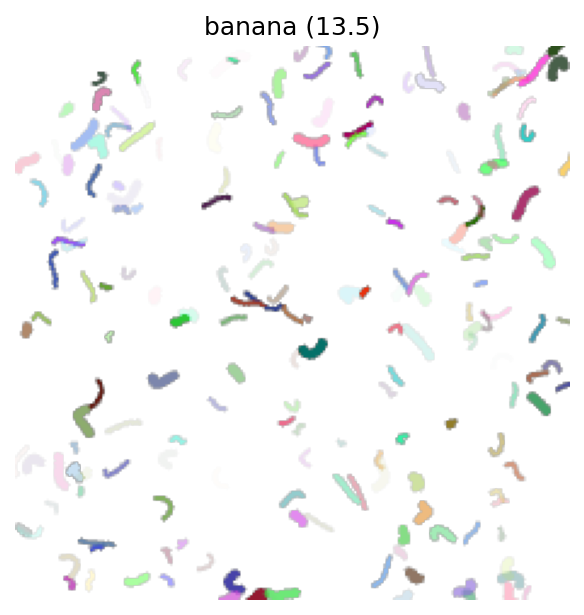

Can we create adversarial examples using this approach? We fix the number of curves, initialize them at random, and then update the curve parameters using gradients from a classification network (a pretrained Inception-V3 in our case). The algorithm is similar to painterly rendering: rasterize the curves, compute the classification score with the pretrained network, take a gradient step on the curve parameters towards maximizing the score of the target class (banana in our case), and repeat. The result is an adversarial example. Since we have direct access to the classification system, this is a white-box attack, and we use the simplest one; many other attacks (algorithms for creating adversarial examples) exist.
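
The four-step loop above (rasterize, classify, gradient step, repeat) can be sketched under the same toy assumptions. Strokes are Gaussian blobs instead of Bézier curves, and a random linear softmax classifier (`CLASSIFIER`, hypothetical) stands in for the pretrained Inception-V3. The sketch maximizes log p(target | image) by plain gradient ascent and clips stroke intensities to [0, 1]. It illustrates a white-box gradient attack and is not the project's implementation.

```python
import numpy as np

H = W = 16
SIGMA = 1.5
NUM_CLASSES = 10
ys, xs = np.mgrid[0:H, 0:W].astype(float)
# Hypothetical stand-in for the pretrained Inception-V3: a random linear softmax classifier.
CLASSIFIER = np.random.default_rng(0).normal(0, 0.1, (NUM_CLASSES, H * W))

def rasterize(colors, centers):
    """Toy differentiable rasterizer: each stroke is a Gaussian blob (not a Bezier curve)."""
    dy = ys[None] - centers[:, 0, None, None]
    dx = xs[None] - centers[:, 1, None, None]
    g = np.exp(-(dy**2 + dx**2) / (2 * SIGMA**2))
    return (colors[:, None, None] * g).sum(axis=0), (g, dy, dx)

def target_logprob_grad(image, target_class):
    """log p(target_class | image) and its gradient with respect to the image."""
    z = CLASSIFIER @ image.ravel()
    z = z - z.max()                                   # numerically stable log-softmax
    logp = z - np.log(np.exp(z).sum())
    grad = CLASSIFIER[target_class] - np.exp(logp) @ CLASSIFIER
    return logp[target_class], grad.reshape(H, W)

def attack(target_class, num_strokes=20, steps=200, lr=0.05, seed=1):
    rng = np.random.default_rng(seed)
    colors = rng.uniform(0, 1, num_strokes)
    centers = rng.uniform(0, [H, W], (num_strokes, 2))
    history = []
    for _ in range(steps):
        image, (g, dy, dx) = rasterize(colors, centers)              # 1. rasterize
        logp, grad_image = target_logprob_grad(image, target_class)  # 2. classify
        history.append(logp)
        w = g * grad_image[None]                                     # 3. chain rule to strokes
        grad_colors = w.sum(axis=(1, 2))
        grad_centers = np.stack([(w * dy).sum(axis=(1, 2)),
                                 (w * dx).sum(axis=(1, 2))], axis=1) * colors[:, None] / SIGMA**2
        colors = np.clip(colors + lr * grad_colors, 0.0, 1.0)        # 4. ascent step, valid range
        centers += lr * grad_centers
    return colors, centers, history

colors, centers, history = attack(target_class=3)
print(f"log p(target): {history[0]:.3f} -> {history[-1]:.3f}")
```

Maximizing the log-probability rather than the raw logit is one common choice. The text only says "maximizing the target class", so the exact objective used in the project may differ.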

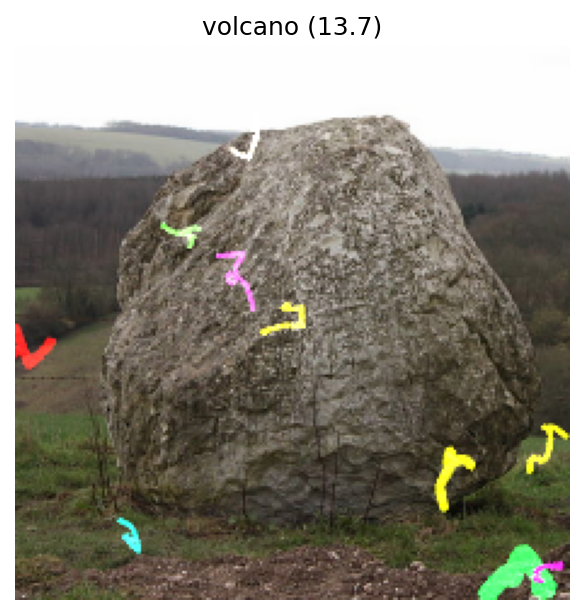

Now, what if we first run some “painterly rendering” steps starting from randomly initialized images, and only then run the adversarial example pipeline? The hope is that the resulting image keeps some meaning.
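
This two-phase schedule can be sketched with the toy blob rasterizer and the hypothetical linear classifier. The same strokes are first fitted to a target image by MSE descent (the painterly phase) and then handed, unchanged, to the adversarial ascent. The step counts, learning rates, and the target `painting` are assumptions, since the text does not specify them.

```python
import numpy as np

H = W = 16
SIGMA = 1.5
NUM_CLASSES = 10
ys, xs = np.mgrid[0:H, 0:W].astype(float)
# Hypothetical linear softmax classifier standing in for Inception-V3.
CLASSIFIER = np.random.default_rng(0).normal(0, 0.1, (NUM_CLASSES, H * W))

def rasterize(colors, centers):
    """Toy rasterizer: strokes are Gaussian blobs summed into one grayscale image."""
    dy = ys[None] - centers[:, 0, None, None]
    dx = xs[None] - centers[:, 1, None, None]
    g = np.exp(-(dy**2 + dx**2) / (2 * SIGMA**2))
    return (colors[:, None, None] * g).sum(axis=0), (g, dy, dx)

def stroke_grads(colors, cache, grad_image):
    """Chain rule from an image gradient to stroke colors and centers."""
    g, dy, dx = cache
    w = g * grad_image[None]
    grad_centers = np.stack([(w * dy).sum(axis=(1, 2)), (w * dx).sum(axis=(1, 2))], axis=1)
    return w.sum(axis=(1, 2)), grad_centers * colors[:, None] / SIGMA**2

def mse(image, target):
    """Painterly objective (minimized) and its image gradient."""
    return ((image - target) ** 2).mean(), 2 * (image - target) / image.size

def target_logprob(image, target_class):
    """Adversarial objective (maximized) and its image gradient."""
    z = CLASSIFIER @ image.ravel()
    z = z - z.max()
    logp = z - np.log(np.exp(z).sum())
    return logp[target_class], (CLASSIFIER[target_class] - np.exp(logp) @ CLASSIFIER).reshape(H, W)

def paint_then_attack(painting, target_class, paint_steps=200, attack_steps=100,
                      paint_lr=0.5, attack_lr=0.05, num_strokes=40, seed=0):
    rng = np.random.default_rng(seed)
    colors = rng.uniform(0, 1, num_strokes)
    centers = rng.uniform(0, [H, W], (num_strokes, 2))
    mse_history, logp_history = [], []
    # Phase 1: painterly rendering, gradient descent toward the painting.
    for _ in range(paint_steps):
        image, cache = rasterize(colors, centers)
        loss, grad_image = mse(image, painting)
        mse_history.append(loss)
        grad_colors, grad_centers = stroke_grads(colors, cache, grad_image)
        colors -= paint_lr * grad_colors
        centers -= paint_lr * grad_centers
    # Phase 2: adversarial attack, gradient ascent on the target class with the same strokes.
    for _ in range(attack_steps):
        image, cache = rasterize(colors, centers)
        logp, grad_image = target_logprob(image, target_class)
        logp_history.append(logp)
        grad_colors, grad_centers = stroke_grads(colors, cache, grad_image)
        colors += attack_lr * grad_colors
        centers += attack_lr * grad_centers
    return colors, centers, mse_history, logp_history

painting = np.zeros((H, W))
painting[:, :8] = 1.0   # hypothetical target: left half bright
colors, centers, mse_history, logp_history = paint_then_attack(painting, target_class=3)
print(f"MSE {mse_history[0]:.3f} -> {mse_history[-1]:.3f}, "
      f"log p {logp_history[0]:.3f} -> {logp_history[-1]:.3f}")
```

The second phase starts from strokes that already resemble an image. Nothing in this sketch pulls them back toward that image during the attack, so how much "meaning" survives depends on how many attack steps are taken.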

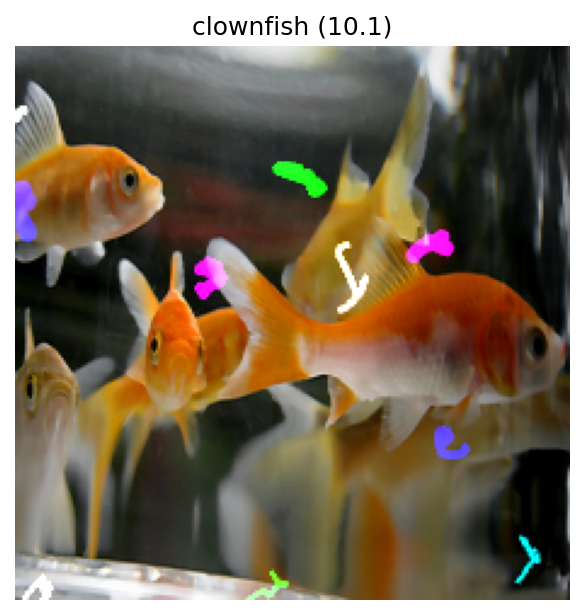

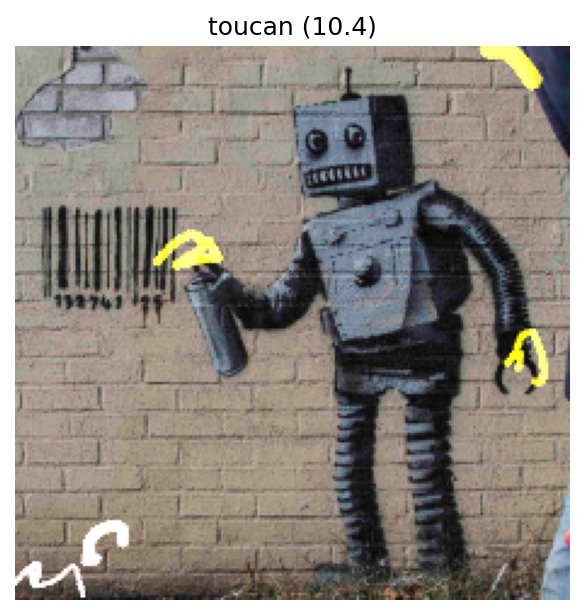

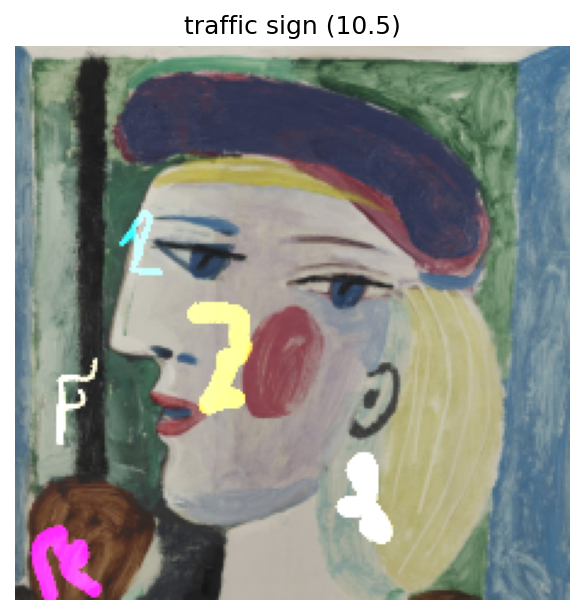

Finally, let’s draw curves on top of a raster image, again aiming to create adversarial examples. I call this adversarial vandalism, as we destroy the original work with small colored strokes drawn on top of it.
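
Here is a minimal sketch of adversarial vandalism. It assumes grayscale images and Gaussian-blob strokes that all share one ink value; per-stroke colors are left out for brevity. It also uses a soft "over" composite, in which the combined stroke opacity alpha = 1 - exp(-sum_k g_k) blends the ink into the fixed artwork. Only the strokes (their positions and the ink) are optimized, and the underlying image is never modified. The classifier is again a hypothetical linear stand-in for Inception-V3, and `composite`/`vandalize` are illustrative names.

```python
import numpy as np

H = W = 16
SIGMA = 0.8            # thin strokes (assumption)
NUM_CLASSES = 10
ys, xs = np.mgrid[0:H, 0:W].astype(float)
# Hypothetical linear softmax classifier standing in for Inception-V3.
CLASSIFIER = np.random.default_rng(0).normal(0, 0.1, (NUM_CLASSES, H * W))

def composite(artwork, centers, ink):
    """Soft strokes of one ink value laid over a fixed raster image."""
    dy = ys[None] - centers[:, 0, None, None]
    dx = xs[None] - centers[:, 1, None, None]
    g = np.exp(-(dy**2 + dx**2) / (2 * SIGMA**2))     # per-stroke coverage
    alpha = 1.0 - np.exp(-g.sum(axis=0))               # combined opacity in [0, 1)
    return (1.0 - alpha) * artwork + alpha * ink, (g, dy, dx, alpha)

def target_logprob(image, target_class):
    """log p(target_class | image) and its gradient with respect to the image."""
    z = CLASSIFIER @ image.ravel()
    z = z - z.max()
    logp = z - np.log(np.exp(z).sum())
    return logp[target_class], (CLASSIFIER[target_class] - np.exp(logp) @ CLASSIFIER).reshape(H, W)

def vandalize(artwork, target_class, num_strokes=12, steps=300, lr=0.2, seed=1):
    rng = np.random.default_rng(seed)
    centers = rng.uniform(0, [H, W], (num_strokes, 2))
    ink = 0.5
    history = []
    for _ in range(steps):
        image, (g, dy, dx, alpha) = composite(artwork, centers, ink)
        logp, grad_image = target_logprob(image, target_class)
        history.append(logp)
        # d image / d alpha = ink - artwork;  d alpha / d g_k = 1 - alpha
        w = g * (grad_image * (ink - artwork) * (1.0 - alpha))[None]
        grad_centers = np.stack([(w * dy).sum(axis=(1, 2)),
                                 (w * dx).sum(axis=(1, 2))], axis=1) / SIGMA**2
        grad_ink = (grad_image * alpha).sum()           # d image / d ink = alpha
        centers += lr * grad_centers
        ink = float(np.clip(ink + lr * grad_ink, 0.0, 1.0))
    image, _ = composite(artwork, centers, ink)
    return image, history

artwork = np.random.default_rng(42).uniform(0, 1, (H, W))   # hypothetical "art piece"
vandalized, history = vandalize(artwork, target_class=3)
print(f"log p(target): {history[0]:.3f} -> {history[-1]:.3f}")
```

Because the output is a convex blend of the artwork and an ink value in [0, 1], the vandalized image stays in the valid intensity range without extra clipping.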

Upd: a bit of inspiration in my blog post.