Reconstruction of Machine-Made Shapes from Bitmap Sketches

Ivan Puhachov\(^{1,3}\), Cedric Martens\(^1\), Paul G. Kry\(^{2,3}\), Mikhail Bessmeltsev\(^1\)

\(^1\) Université de Montréal

\(^2\) McGill University

\(^3\) Huawei Technologies, Canada

SIGGRAPH Asia 2023

Abstract

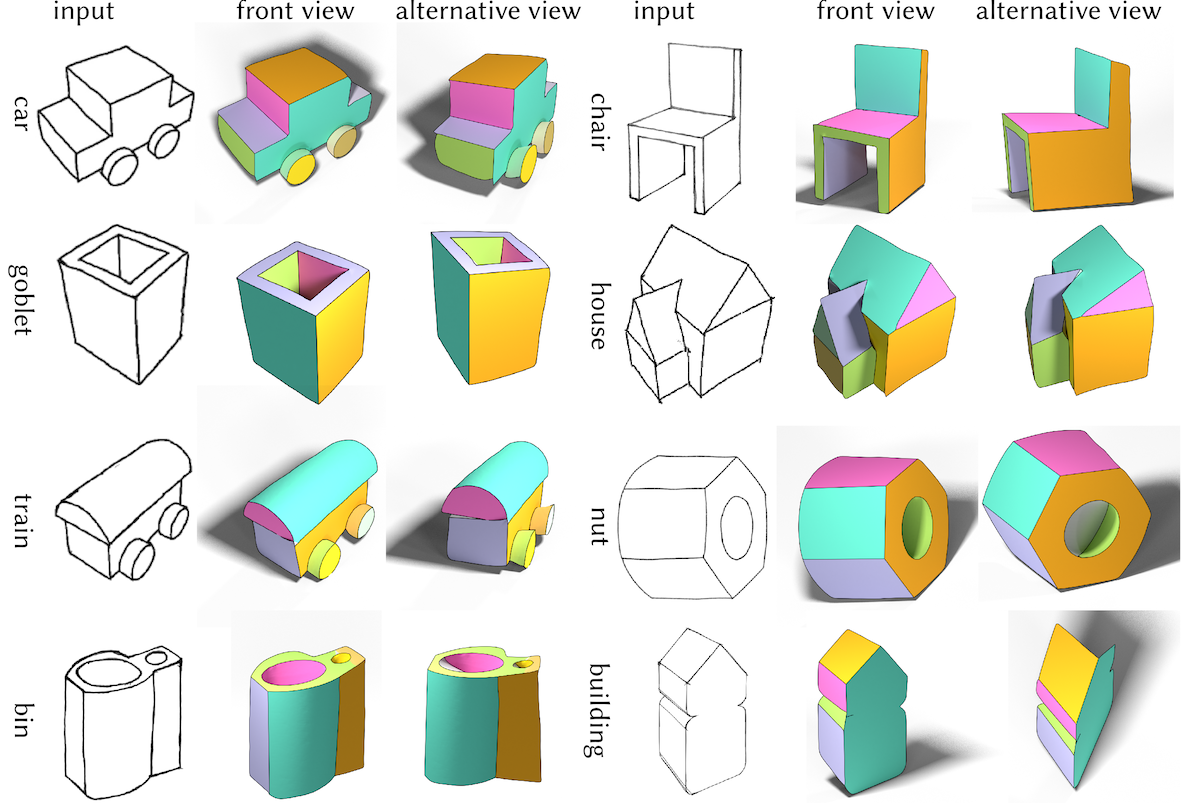

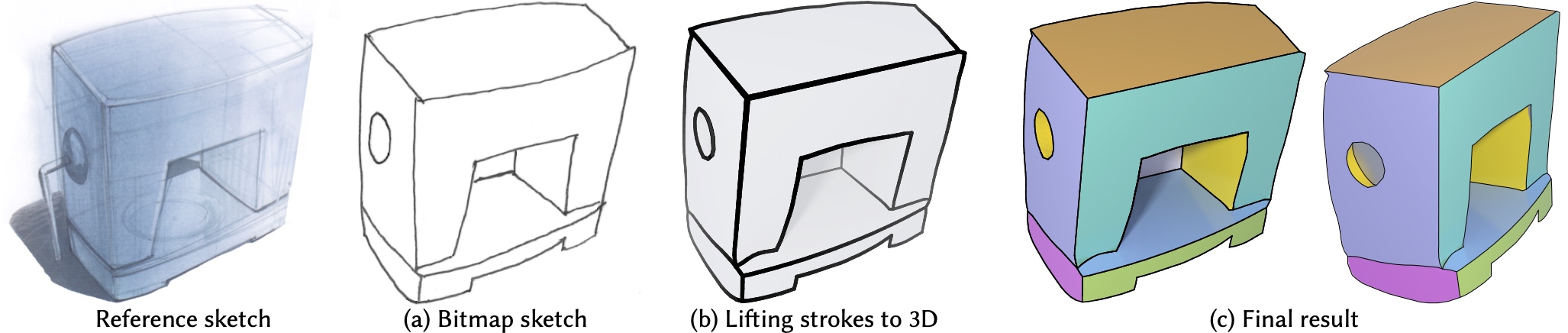

We propose a method of reconstructing 3D machine-made shapes from bitmap sketches by separating an input image into individual patches and jointly optimizing their geometry.

We rely on two main observations:

(1) human observers interpret sketches of man-made shapes as a collection of simple geometric primitives, and

(2) sketch strokes often indicate occlusion contours or sharp ridges between those primitives.

Using these observations, we design a system that takes a single bitmap image of a shape, estimates image depth and a segmentation into primitives with neural networks, and then fits primitives to the predicted depth while determining occlusion contours and aligning intersections with the input drawing via optimization.
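To make the "fit primitives to the predicted depth" step concrete, here is a minimal sketch that is not the authors' implementation. It assumes the simplest possible primitive (a plane z = a·x + b·y + c in image coordinates), a depth map and a per-pixel label map that are already available as NumPy arrays, and an ordinary least-squares fit per patch. The function names `fit_plane_to_patch`, `fit_planes`, and `ridge_line` are hypothetical, and the synthetic inputs stand in for the network predictions.

```python
import numpy as np

def fit_plane_to_patch(depth, mask):
    """Least-squares fit of z = a*x + b*y + c to the depth pixels selected by mask."""
    ys, xs = np.nonzero(mask)                       # pixel coordinates inside the patch
    A = np.column_stack([xs, ys, np.ones(len(xs))])
    z = depth[ys, xs]
    coeffs, *_ = np.linalg.lstsq(A, z, rcond=None)
    return coeffs                                   # array([a, b, c])

def fit_planes(depth, labels):
    """Fit one plane per segment label (assumed: every segment is planar)."""
    return {int(k): fit_plane_to_patch(depth, labels == k) for k in np.unique(labels)}

def ridge_line(p, q):
    """Image-space line alpha*x + beta*y + gamma = 0 where two fitted planes meet."""
    return p - q

# Synthetic stand-ins for the predicted depth and segmentation (assumptions).
h, w = 32, 32
ys, xs = np.mgrid[0:h, 0:w]
labels = (xs >= w // 2).astype(int)                 # two patches: left and right half
depth = np.where(labels == 0, 0.1 * xs + 2.0, -0.2 * ys + 5.0).astype(float)

planes = fit_planes(depth, labels)
ridge = ridge_line(planes[0], planes[1])
print(planes[0], planes[1], ridge)
```

In this toy setting the intersection of two neighbouring planes projects to a straight line in the image, which is the kind of sharp ridge that observation (2) expects a sketch stroke to mark. A joint optimization could then penalize the distance between such lines and the drawn strokes. The sketch fits each patch independently and ignores occlusion contours.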

Unlike previous work, our approach does not require additional input, annotation, or templates, and does not require retraining for a new category of man-made shapes. Our method produces triangular meshes that display sharp geometric features and are suitable for downstream applications, such as editing, rendering, and shading.

Citation

@article{Puhachov2023ReconMMSketch,
  author = {Ivan Puhachov and Cedric Martens and Paul Kry and Mikhail Bessmeltsev},
  title = {Reconstruction of Machine-Made Shapes from Bitmap Sketches},
  journal = {ACM Transactions on Graphics (Proceedings of SIGGRAPH Asia)},
  volume = {42}, number = {6}, year = {2023}, month = dec,
  doi = {10.1145/3618361}
}